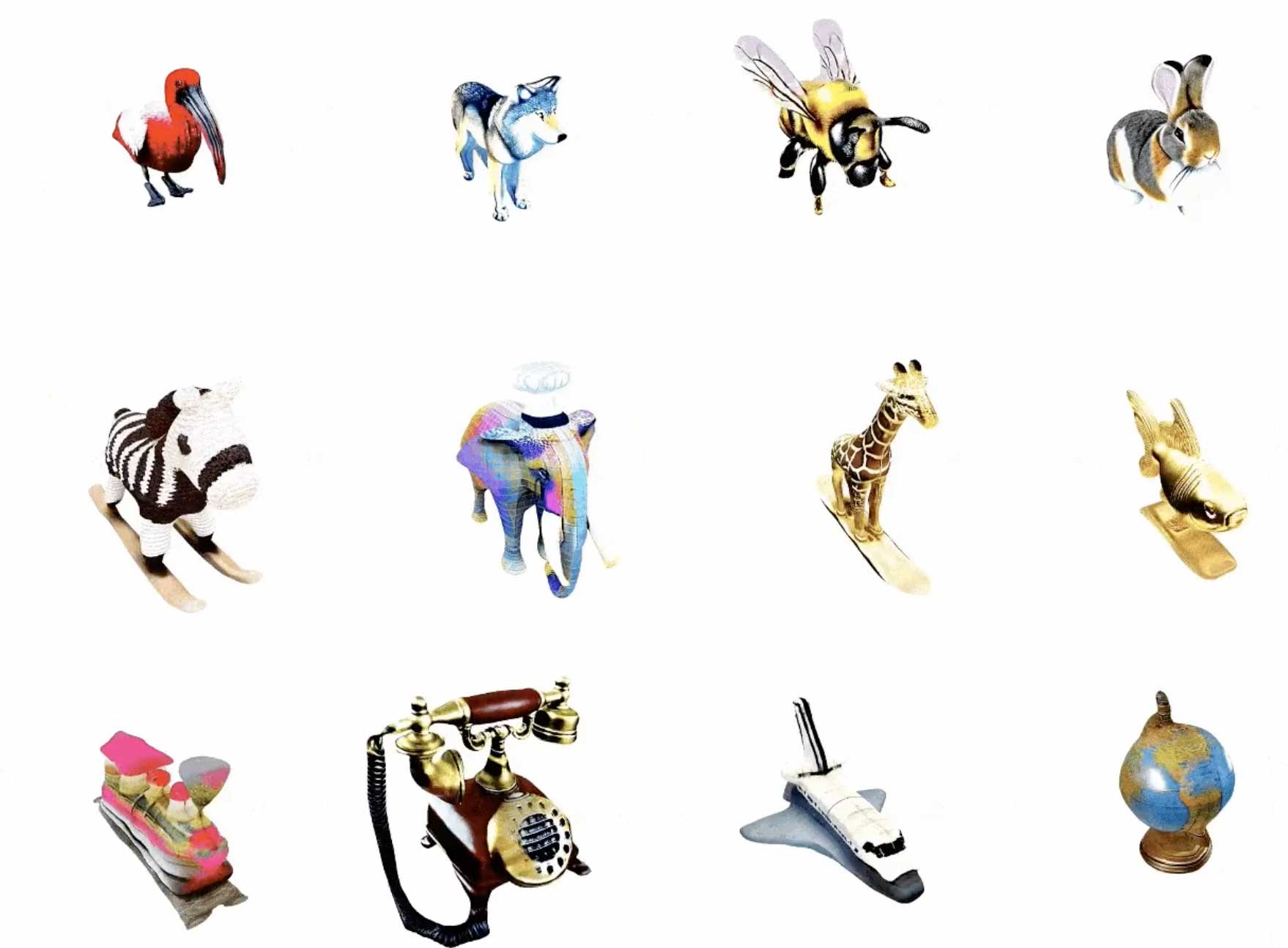

At the GTC conference, NVIDIA presented LATTE3D, a generative AI model that converts text prompts into 3D models. Within one second, LATTE3D generates photorealistic 3D objects from natural language input, ready for use in game development, design and robotics.

In contrast to existing text-to-3D systems such as MVDream or 3DTopia, LATTE3D delivers comparable or higher quality in just 400 milliseconds. This speed advantage could substantially shorten workflows in 3D-heavy industries. Rather than committing to fixed settings from the outset, users can start with a fast draft and have a refined, higher-quality version generated afterwards. So far, LATTE3D has been trained on everyday objects and animals, but the model can be extended to other categories with new data sets.

Near-instant 3D generation from text descriptions could improve many workflows. Instead of searching through 3D asset libraries or modeling objects manually, a short text prompt could soon be enough to create 3D content and import it into rendering applications.

Although LATTE3D is still in the research phase, NVIDIA is already planning an extension that generates 4D animations from text. No commercial product launch has been announced so far. Details of the work can be found directly at NVIDIA.
