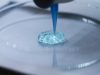

Researchers at Nvidia have presented Neuralangelo, an AI model that creates detailed 3D reconstructions from 2D video sequences. The software can faithfully recreate buildings, sculptures and other objects as 3D models.

According to Nvidia, Neuralangelo can transfer surface structures and materials such as roof tiles, glass or marble from 2D video to the 3D model with astonishing accuracy. This quality makes it easier for developers and creative professionals to create usable digital objects from smartphone video, the company said.

Using multiple camera perspectives, the system analyzes an object’s shape, size and depth. This initially produces a rough 3D model, which is then refined in an optimization step.

In demos, Nvidia showed detailed reconstructions of Michelangelo’s statue of David, a truck, and the interiors and exteriors of buildings, among other things. The resulting models can then be used in VR applications, digital twins or robotics.

According to the developers, Neuralangelo significantly outperforms previous approaches in capturing fine details and textures. The quality of the automatic 3D reconstruction could also make it useful for generating print data for 3D printing. Time-consuming manual 3D digitization of real objects would thus become at least partly superfluous.

AI-assisted 3D modeling directly from video could simplify many workflows. Nvidia’s approach shows how AI is increasingly being put to work in creative processes.

